Best Practices in Ongoing Operations

Algorithm Maintenance and Support

Once a model is deployed to production, its performance must be monitored and managed. Models typically require frequent retraining by data science teams. Some retraining can be automated, for example by triggering it when new data or new labels become available, or when model performance drifts.

However, for most real-world problems, teams should expect data scientists to put in ongoing work to understand the underlying reasons for model performance degradation or data deviations, and then to debug or improve model performance over time.

Organizations therefore need to develop and apply significant technical agility to rapidly retrain and deploy new AI/ML models as circumstances and business requirements evolve.

Practitioners should be aware that all AI/ML models, if not actively managed, will reach the end of their useful life sooner than anticipated. Planning for that eventuality helps ensure that the business relies on AI models that are performing at their peak.

Model Monitoring

Model performance should be expected to change over time. This is because business operations are dynamic, with constant changes to data, business processes, and external environments. The trained model is representative of a historical period that may or may not still be relevant. As new data are collected, retraining and updating the model will drive ongoing performance improvements.

Model drift and performance monitoring are critical for continued adoption of AI across the organization. Businesses may employ a variety of techniques to monitor model performance, including capturing data drift relative to a reference data set, deploying champion as well as challenger models to enable the “hot replacement” of one model with another as circumstances change, and creating model KPI dashboards to track performance.

Reference data sets provide a clear baseline of performance for trained models. They are often developed as part of the prototyping process, require a high degree of vetting, and establish a clear set of bounds in which the model must perform.
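As an illustration of capturing data drift relative to a reference data set, the sketch below computes the Population Stability Index (PSI) for a single numeric feature. PSI is one common drift measure chosen here as an example; the text does not name a specific metric. The function name, the equal-width binning, and the `eps` floor are all assumptions made for this sketch.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI of one numeric feature: current sample vs. reference sample.

    Hypothetical helper for illustration. Bins are equal-width and are
    derived from the reference sample only.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread; cannot bin it")
    width = (hi - lo) / n_bins

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range fall into the edge bins.
            idx = min(max(int((v - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum(
        (c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares)
    )


reference_sample = [float(v) for v in range(100)]
shifted_sample = [v + 50.0 for v in reference_sample]

psi_same = population_stability_index(reference_sample, reference_sample)
psi_shifted = population_stability_index(reference_sample, shifted_sample)
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but any alerting threshold should be validated against the specific model and data.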

Champion/challenger methods are employed when there are multiple viable model solutions to an AI problem and there is a clear performance benchmark against which all models can be evaluated. When operating in production, one of multiple models is selected as the champion, and all or most of the predictions come from that model. Challenger models also operate in production, but often in shadow mode.

For example, a challenger model will make predictions at the same frequency as the champion model but may never be exposed to a user or downstream service. If the underlying data change, or if retraining impacts the models, the challenger may come to perform better against the predefined benchmark. The system can then either alert a user to promote the challenger to be the new champion or promote it automatically.
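The champion/challenger pattern described above can be sketched as follows. This is a minimal illustration, not C3 AI's implementation: the class name, the use of mean absolute error as the predefined benchmark, and the `min_samples` guard are all assumptions introduced for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Model = Callable[[float], float]


@dataclass
class ChampionChallenger:
    """Hypothetical wrapper: serves the champion, shadows the challenger."""

    champion: Model
    challenger: Model
    auto_promote: bool = False
    min_samples: int = 30  # assumed guard against promoting on too little data
    _pending: Dict[str, Tuple[float, float]] = field(default_factory=dict)
    _champion_errors: List[float] = field(default_factory=list)
    _challenger_errors: List[float] = field(default_factory=list)

    def predict(self, request_id: str, x: float) -> float:
        served = self.champion(x)
        shadow = self.challenger(x)  # shadow mode: stored, never returned
        self._pending[request_id] = (served, shadow)
        return served

    def record_outcome(self, request_id: str, actual: float) -> None:
        """Score both models once the true value is known."""
        served, shadow = self._pending.pop(request_id)
        self._champion_errors.append(abs(served - actual))
        self._challenger_errors.append(abs(shadow - actual))

    def review(self) -> str:
        """Compare mean absolute errors and alert or promote."""
        n = len(self._champion_errors)
        if n < self.min_samples:
            return "insufficient data"
        champion_mae = sum(self._champion_errors) / n
        challenger_mae = sum(self._challenger_errors) / n
        if challenger_mae >= champion_mae:
            return "keep champion"
        if not self.auto_promote:
            return "alert: challenger outperforms champion"
        # Swap roles and restart scoring for the new pairing.
        self.champion, self.challenger = self.challenger, self.champion
        self._champion_errors.clear()
        self._challenger_errors.clear()
        return "promoted"
```

In this sketch the caller only ever receives the champion's prediction; the challenger's output is held until the actual outcome arrives and is then scored against the same benchmark.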

Most importantly, as we have scaled AI systems at C3 AI, we have emphasized the need to present executives, business users, and data scientists with up-to-date views on model performance. These insights, continually updated, provide clarity and transparency to support user adoption and highlight potential emergent issues.

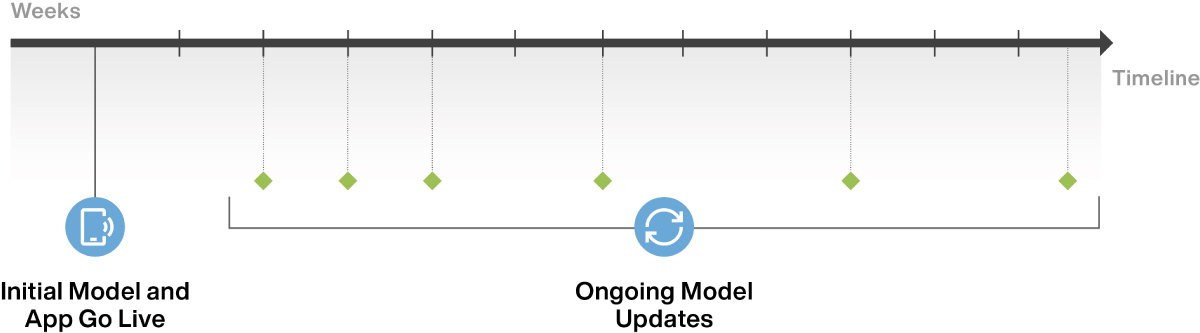

Model Updates

In practice, machine learning models may be retrained when new, relevant data are available. Retraining can occur continuously as new data arrive or, more commonly, at a regular interval. It can also occur in an automated manner or with a human in the loop to verify training data and performance metrics.

In our experience, retraining models at a regular interval or upon availability of a certain amount of new data balances the high compute cost of retraining against the decline in model performance over time.
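A retraining policy of this kind can be expressed as a small rule that fires when either condition is met. The sketch below is illustrative only: the class name and the default thresholds (30 days, 10,000 new rows) are placeholder assumptions, not recommendations from the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RetrainPolicy:
    """Hypothetical policy: retrain on a schedule or on data volume."""

    max_age: timedelta = timedelta(days=30)  # assumed regular interval
    min_new_rows: int = 10_000               # assumed new-data threshold

    def should_retrain(
        self, last_trained: datetime, new_rows: int, now: datetime
    ) -> bool:
        model_is_stale = (now - last_trained) >= self.max_age
        enough_new_data = new_rows >= self.min_new_rows
        return model_is_stale or enough_new_data


policy = RetrainPolicy()
trained_at = datetime(2024, 1, 1)

# 9 days old with little new data: no retraining yet.
recent_small = policy.should_retrain(trained_at, 500, datetime(2024, 1, 10))
# 9 days old but a large batch of new data has arrived.
recent_large = policy.should_retrain(trained_at, 25_000, datetime(2024, 1, 10))
# 45 days old: the regular interval has elapsed.
stale_small = policy.should_retrain(trained_at, 500, datetime(2024, 2, 15))
```

Tightening either threshold raises compute spend, while loosening it lets the model age longer between updates. A human-in-the-loop step could sit between this check and the actual retraining job.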

Figure 38: Ongoing model retraining and updates drive continuous improvement and ensure that predictions remain relevant and accurate.